7.3 Hypothesis Testing

Hypothesis testing is another tool that can be used for statistical inference. Let’s warm up to the ideas of hypothesis testing by considering two broad types of scientific questions: (1) Is there a relationship? (2) What is the relationship?

Suppose that we are thinking about the relationship between housing prices and square footage. Accounting for sampling variation…

- …is there a real relationship between price and living area?

- …what is the real relationship between price and living area?

Whether by mathematical theory or bootstrapping, confidence intervals provide a range of plausible values for the true population parameter and allow us to answer both types of questions:

- Is there a real relationship between price and living area?

- Is the null value (0 for slopes, 1 for odds ratios) in the interval?

- What is the relationship between price and living area?

- Look at the estimate and the values in the interval

Hypothesis testing is a general framework for answering questions of the first type. It is a general framework for making decisions between two “theories”.

Example 1

Decide between: true support for a law = 50% vs. true support \(\neq\) 50%Example 2

In the model \(\text{Price} = \beta_0 + \beta_1\text{Living Area} + \text{Error}\), decide between \(\beta_1 = 0\) and \(\beta_1 \neq 0\).

7.3.1 Hypothesis Test Procedure

7.3.1.1 Hypotheses

In a hypothesis test, we use data to decide between two “hypotheses” about the population. These hypotheses can be described using mathematical notation and words.

- Null hypothesis (\(H_0\) = “H naught”)

- A status quo hypothesis

- In words: there is no effect/relationship/difference.

- In notation: the population parameter equals a null value, such as the slope being zero \(H_0: \beta_1 = 0\) or the odds ratio being 1, \(H_0: e^{\beta_1} = 1\).

- Hypothesis that is assumed to be true by default.

- Alternative hypothesis (\(H_A\) or \(H_1\))

- A non-status quo hypothesis

- In words: there is an effect/relationship/difference.

- In notation: the population parameter does not equal a null value, \(H_A: \beta_1 \neq 0\) or \(H_A: e^{\beta_1} \neq 1\).

- This is typically news worthy.

7.3.1.2 Test statistics

Let’s consider an example research question: Is there a relationship between house price and living area? We can try to answer that with the linear regression model below:

\[E[\text{Price} | \text{Living Area}] = \beta_0 + \beta_1\text{Living Area}\]

We would phrase our null and alternative hypotheses using mathematical notation as follows:

\[H_0: \beta_1 = 0 \qquad \text{vs.} \qquad H_A: \beta_1 \neq 0\] In words, the null hypothesis \(H_0\) describes the situation of “no relationship” because it hypothesizes that the true slope \(\beta_1\) is 0. The alternative hypothesis posits a real relationship: the true population slope \(\beta_1\) is not 0. That is, there is not no relationship. (Double negatives!)

To gather evidence, we collect data and fit a model. From the model, we can compute a test statistic, which tells us how far the observed data is from the null hypothesis. The test statistic is a discrepancy measure where large values indicate higher discrepancy with the null hypothesis, \(H_0\).

When we are studying slopes in a linear model, the test statistic is the distance from the null hypothesis value of 0 (the null value) in terms of standard errors. The test statistic for testing one slope coefficient is:

\[T= \text{Test statistic} = \frac{\text{estimate} - \text{null value}}{\text{std. error of estimate}}\]

It looks like a z-score. It expresses: how far away is our slope estimate from the null value in units of standard error? With large values (in magnitude) of the test statistic, our data (and our estimate) is discrepant with what the null hypothesis proposes because our estimate is quite far away from the null value in standard error units. A large test statistic makes us start to doubt the null value.

7.3.1.3 Accounting for Uncertainty

Test statistics are random variables! Why? Because they are based on our random sample of data. Thus, it will be helpful to understand the sampling distributions of test statistics.

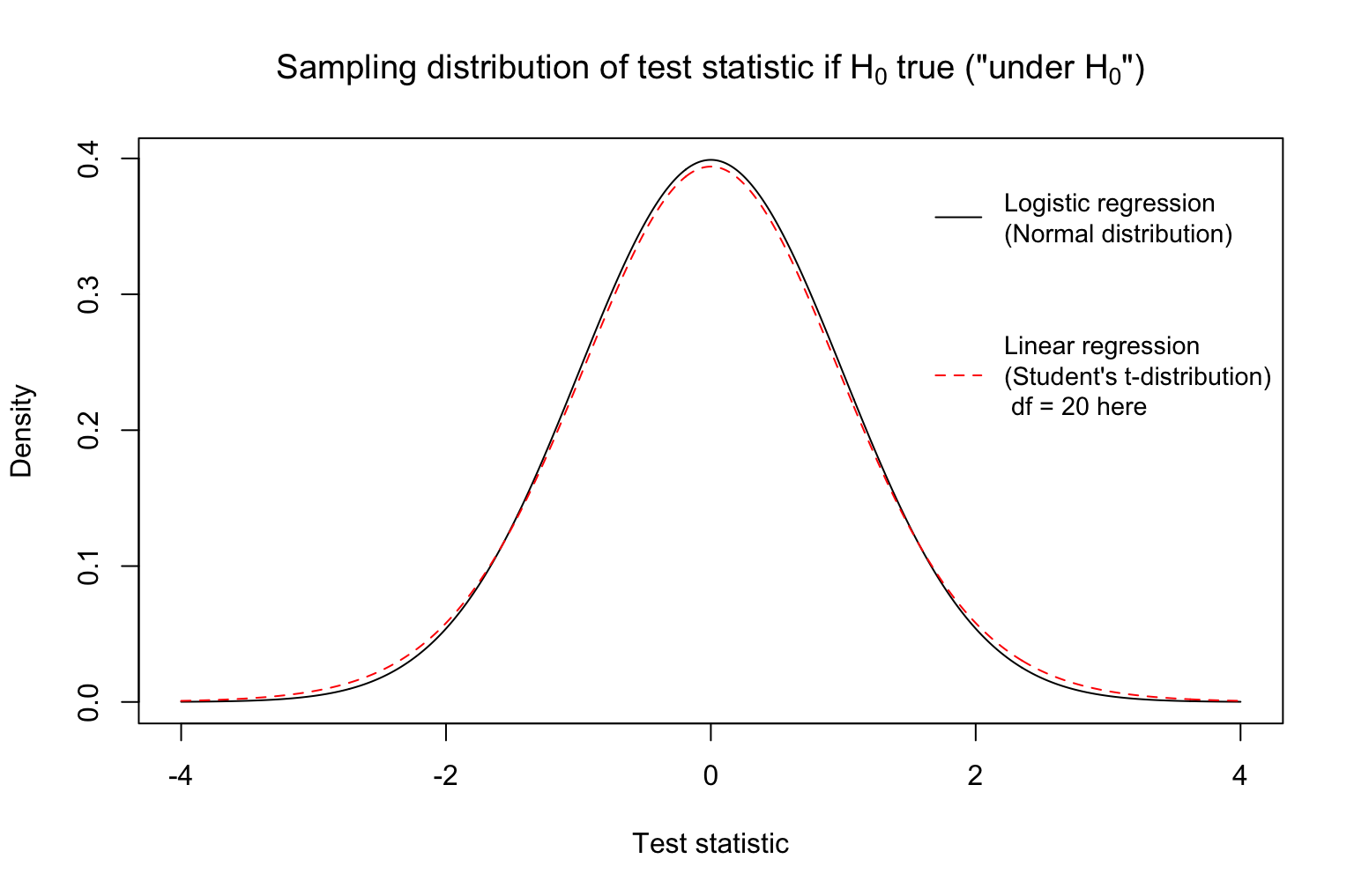

What test statistics are we likely to get if \(H_0\) is true? The distribution of the test statistic introduced above “under \(H_0\)” (that is, if \(H_0\) is true) is shown below. Note that it is centered at 0. This distribution shows that if indeed the population parameter equals the null value, there is variation in the test statistics we might obtain from random samples, but most test statistics are around zero.

It would be very unlikely for us to get a pretty large (extreme) test statistic if indeed \(H_0\) were true. Why? The density drops rapidly at more extreme values.

How large in magnitude must the test statistic be in order to make a decision between \(H_0\) and \(H_A\)? We will use another metric called a p-value. This allows us to account for the variability and randomness of our sample data.

Assuming \(H_0\) is true, we ask: What is the chance of observing a test statistic which is “as or even more extreme” than the one we just saw? This conditional probability is called a p-value, \(P(|T| \geq t_{obs} | H_0\text{ is true})\).

If our test statistic is large, then our estimate is quite far away from the null value (in standard error units), and then the chance of observing someone this large or larger (assuming \(H_0\) is true) would be very small. A large test statistic leads to a small p-value.

If our test statistic is small, then our estimate is quite close to the null value (in standard error units), and then the chance of observing someone this large or larger (assuming \(H_0\) is true) would be very large. A small test statistic leads to a large p-value.

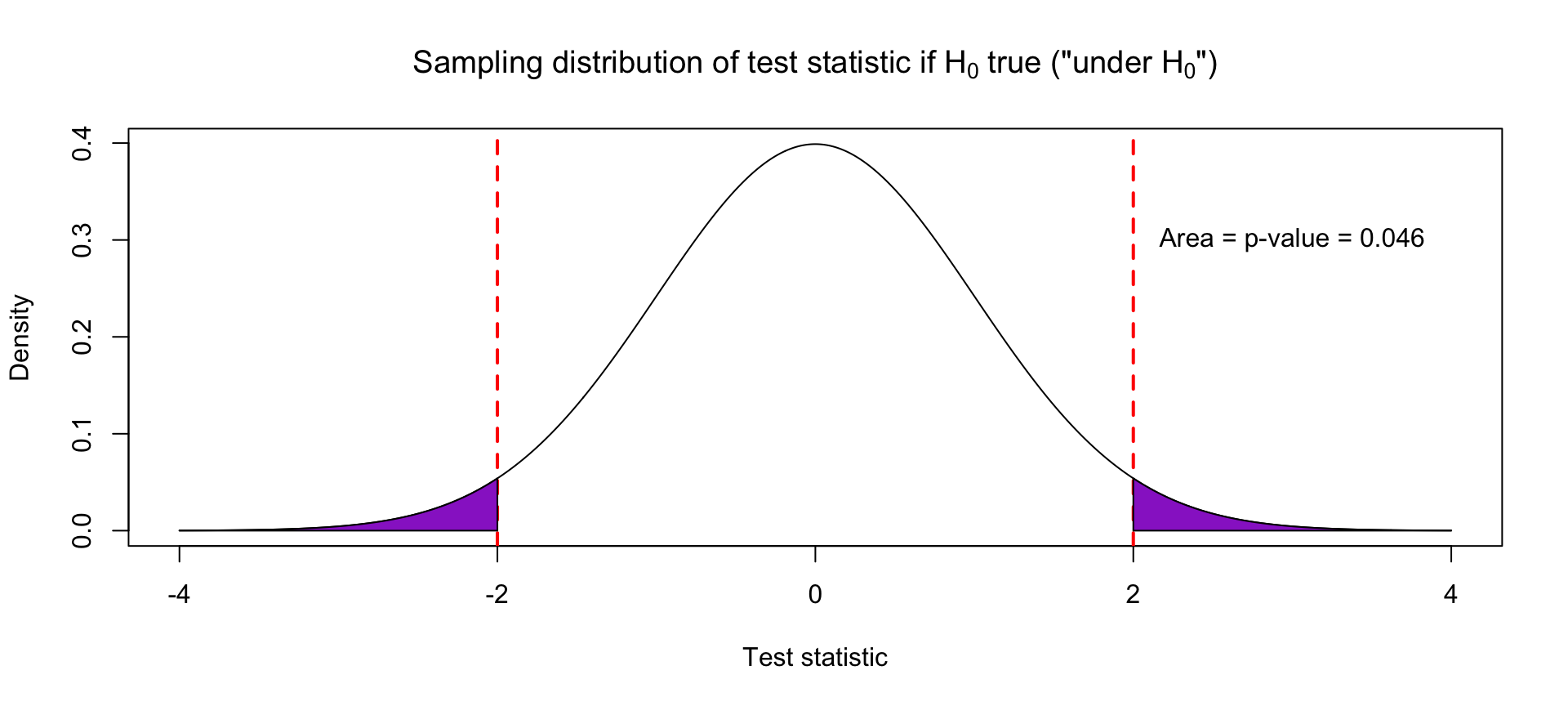

Suppose that our observed test statistic for a slope coefficient is 2. What test statistics are “as or more extreme”?

- Absolute value of test statistic is at least 2: \(|\text{Test statistic}| \geq 2\)

- In other words: \(\text{Test statistic} \geq 2\) or \(\text{Test statistic} \leq -2\)

The p-value is the area under the curve of the probability density function in those “as or more extreme” regions.

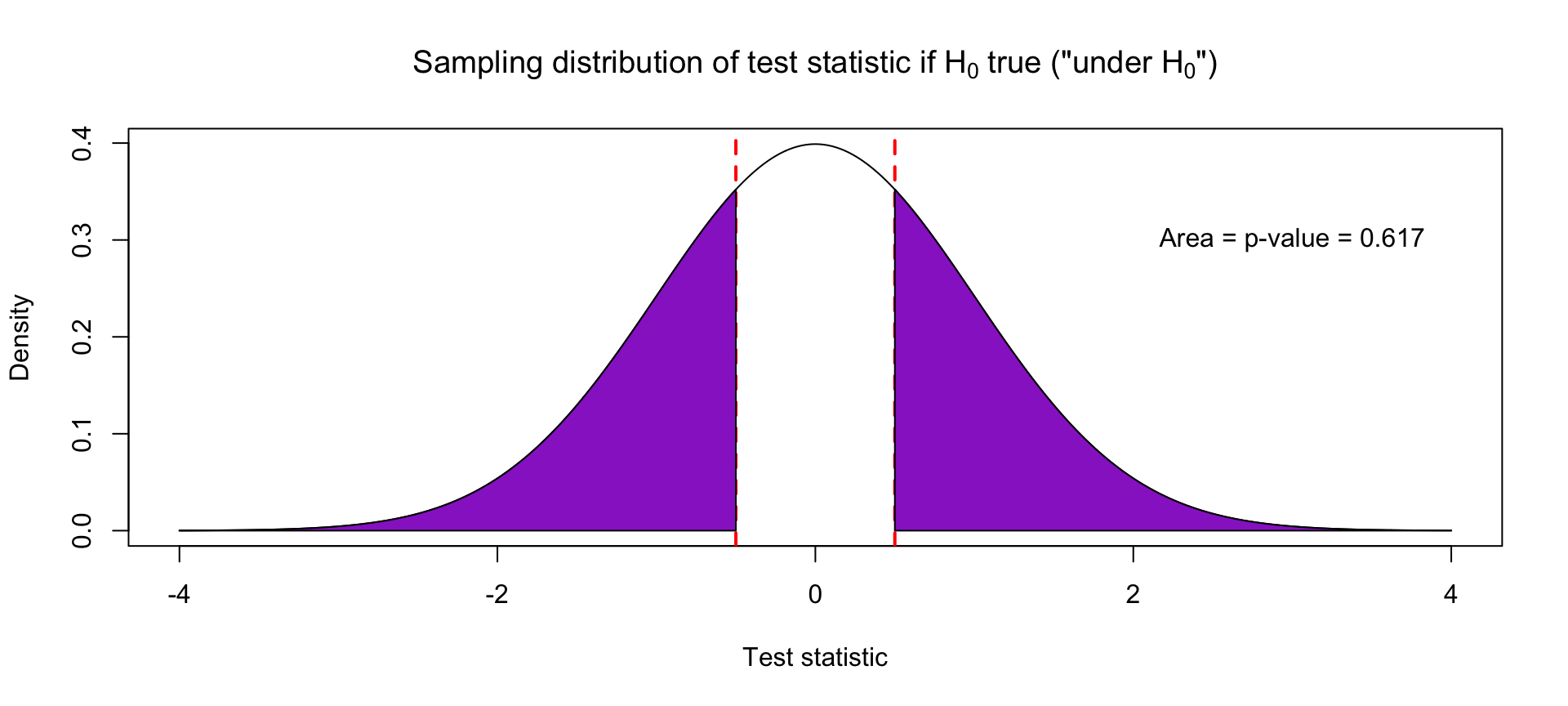

Suppose the test statistic for a slope coefficient is -0.5. This means that the estimated slope is half of a standard error away from 0, which indicates no relationship. This is not the far and happens quite frequently, about 62% of the time, when the true slope is actually 0.

7.3.1.4 Making Decisions

If the p-value is “small”, then we reject \(H_0\) in favor of \(H_A\). Why? A small p-value (by definition) says that if the null hypotheses were indeed true, we are unlikely to have seen such an extreme discrepancy measure (test statistic). We made an assumption that the null is true, and operating under that assumption, we observed something odd and unusual. This makes us reconsider our null hypothesis.

How small is small enough for a p-value? We will set a threshold \(\alpha\) ahead of time, before looking at the data. P-values less than this threshold will be “small enough”. When we talk about errors of the decisions associated with rejecting or not rejecting the null hypothesis, the meaning of \(\alpha\) will become more clear.

7.3.1.5 Procedure Summary

- State hypotheses \(H_0\) and \(H_A\).

- Select \(\alpha\), a threshold for what is considered to be a small enough p-value.

- Calculate a test statistic.

- Calculate the corresponding p-value.

- Make a decision:

- If p-value \(\leq\alpha\), reject \(H_0\) in favor of \(H_A\).

- Otherwise, we fail to reject \(H_0\) for lack of evidence.

(If this helps to remember: U.S. jurors’ decisions are “guilty” and “not guilty”. Not “guilty” and “innocent”.)

7.3.2 Hypothesis Testing Errors

Just as with model predictions, we may make errors when doing hypothesis tests.

We may decide to reject \(H_0\) when it is actually true. We may decide to not reject \(H_0\) when it is actually false.

We give these two types of errors names.

Type 1 Error is when you reject \(H_0\) when it is actually true. This is a false positive because you are concluding there is a real relationship when there is none. This would happen if one study published that coffee causes cancer in one group of people, but no one else could actually replicate that result since coffee doesn’t actually cause cancer.

Type 2 Error is when you don’t reject \(H_0\) when it is actually false. This is a false negative because you would conclude there is no real relationship when there is a real relationship. This happens when our sample size is not large enough to detect the real relationship due to the large amount of noise due to sampling variability.

We care about both of these types of errors. Sometimes we prioritize one over the other. Based on the framework presented, we control the chance of a Type 1 error through the confidence level/p-value threshold we used. In fact, the chance of a Type 1 Error is \(\alpha\),

\[P(\text{ Type 1 Error }) = P(\text{ Reject }H_0 ~|~H_0\text{ is true} ) = \alpha\]

Let \(\alpha = 0.05\) for a moment. If the null hypothesis (\(H_0\)) is actually true, then about 5% of the time, we’d get unusual test statistics due to random sample data. With those samples, we would incorrectly conclude that there was a real relationship.

The chance of a Type 2 Error is often notated as \(\beta\) (but this is not the same value as the slope),

\[P(\text{ Type 2 Error }) = P(\text{ Fail to Reject }H_0 ~|~H_0\text{ is false} ) = \beta\]

We control the chance of a Type 2 error when choosing the sample size. With a larger sample size \(n\), we will be able to more accurately detect real relationships. The power of a test, the ability to detect real relationships, is \(P(\text{Reject }H_0 ~|~H_0\text{ is false}) = 1 - \beta\). In order to calculate these two probabilities, we’d need to know the value (or at least a good idea) of the true effect.

To recap,

- If we lower \(\alpha\), the threshold we use to determine the p-value is small enough to reject \(H_0\), we can reduce the chance of a Type 1 Error.

- Lowering \(\alpha\) makes it harder to reject \(H_0\), thus we might have a higher chance of a Type 2 Error.

- Another way we can increase the power and thus decrease the chance of a Type 2 Error is to increase the sample size.

7.3.3 Statistical Significance v. Practical Significance

The common underlying question that we ask as Statisticians is “Is there a real relationship in the population?”

We can use confidence intervals or hypothesis testing to help us answer this question.

If we note that the no relationship value is NOT in the confidence interval or the p-value is less then \(\alpha\), we can say that there is significant evidence to suggest that there is a real relationship. We can conclude there is a statistically significant relationship because the relationship we observed it is unlikely be due only to sampling variabliliy.

But as we discussed in class, there are two ways you can control the width of a confidence interval. If we increase the sample size \(n\), the standard error decreases and thus decreasing the width of the interval. If we decrease our confidence level (increase \(\alpha\)), then we decrease the width of the interval.

A relationship is practically significant if the estimated effect is large enough to impact real life decisions. For example, an Internet company may run a study on website design. Since data on observed clicks is fairly cheap to obtain, their sample size is 1 million people (!). With large data sets, we will conclude almost every relationship is statistically significant because the variability will be incredibly small. That doesn’t mean we should always change the website design. How large of an impact did the size of the font make on user behavior? That depends on the business model. On the other hand, in-person human studies are expensive to run and sample sizes tended to be in the 100’s. There may be a true relationship but we can’t distinguish the “signal” from the “noise” due to the higher levels of sampling variability. While we may not always have statistical significance, the estimated effect is important to consider when designing the next study.

Hypothesis tests are useful in determining statistical significance (Answering: “Is there a relationship?”).

Confidence intervals are more useful in determining practical significance (Answering: “What is the relationship?”)

Recommended Readings about P-values and Limitations of Hypothesis Testing:

7.3.4 Hypotheses involving more than one coefficient

So far we’ve considered hypothesis tests involving a single regression coefficient. However, when we are interested in testing whether the relationship between our outcome and more than one predictor is simultaneously statistically significant, we need a re-frame our hypothesis tests in terms of multiple coefficients.

Suppose, for example, we are interested in the association between the sepal length of an iris and the species type of that iris (setosa, versicolor, or virginica). We can fit a simple linear regression model with species type as a multi-level categorical predictor, as follows:

\[ E[\text{Sepal.Length} \mid \text{Species}] = \beta_0 + \beta_1 \text{Versicolor} + \beta_2 \text{Virginica} \]

Note that neither \(\beta_1\) nor \(\beta_2\) alone correspond to the relationship between species type and sepal length! They correspond to the difference in expected sepal length, comparing each of those species to the reference group (the setosa species) separately.

If species and sepal length were truly unrelated, the null hypothesis would involve both of these coefficients. We can write our null and alternative hypotheses as:

\[ H_0: \beta_1, \beta_2 = 0 \quad \text{vs.} \quad H_1: \text{At least one of } \beta_1, \beta_2 \neq 0 \]

Here, the null hypothesis described the situation of “no relationship” because it hypothesizes that the differences in outcome between all groups defined by out multi-level categorical predictor are truly \(0\). The alternative posits that there is some difference in outcome between at least one of the groups.

A null hypothesis where more than one coefficient is set equal to zero requires what is referred to as an F-test.

7.3.4.1 Nested Models

Nested models are one such case where F-tests can be used to directly compare the larger model and the smaller nested model. If you’ve removed one or more variables from a model, the result is a smaller nested model. An example of two models that are nested would be:

\[ E[Y \mid A, B, C] = \beta_0 + \beta_1 A + \beta_2 B + \beta_3 C \] and the smaller model

\[ E[Y \mid A] = \beta_0 + \beta_1 A \]

The F-test that compares the larger model to the smaller model in this case determines whether or not the relationship between \(Y\) and both \(B\) and \(C\) is statistically significant, adjusting for \(A\). In symbols, our null and alternative hypotheses comparing these models can be written as

\[ H_0: \beta_2, \beta_3 = 0 \quad \text{vs.} \quad H_A: \text{At least one of }\beta_2, \beta_3 \neq 0 \]

The test statistic for this nested test compares the smaller model to the larger, full model. For a linear regression model, this is sometimes referred to as a nested F-test, and the test statistic is a ratio comparing the sum of squared residuals of either model (F \(\sim\) 1 if \(H_0\) is true). For logistic regression, this nested test is called a likelihood ratio test and the test statistic is a ratio comparing the likelihood or “goodness” of a model (\(\chi \sim 1\) if \(H_0\) is true). The larger the test statistic, the bigger the difference is between the small modeler model and the larger model.

For this reason, F-tests are sometimes used as a metric for doing model selection/comparison. More details on this application of F-tests are given in Section 7.4.

7.3.4.2 F-Tests in R

There are two primary ways to conduct F-tests in R that we will cover in this course.

The first you already know how to do (surprise!), and the second will use the anova function.

If you are familiar with the term “Analysis of Variance”, you may have heard of the anova function already!

In this case, we will use the function primarily because it makes it quite simple to conduct nested F-tests.

Recall our example from above, where we were interested in the relationship between sepal length of irises and species type. Our null hypothesis was that the coefficients for both the versicolor species, \(\beta_1\), and the virginica species, \(\beta_2\) were equal to zero. Note that if our null hypothesis is true, our linear regression model simplifies to:

\[ \begin{aligned} E[\text{Sepal.Length} \mid \text{Species}] & = \beta_0 + \beta_1 \text{Versicolor} + \beta_2 \text{Virginica} \\ & = \beta_0 + \beta_1 * 0 + \beta_2 * 0 \\ & = \beta_0 \end{aligned} \]

The above model is sometimes referred to as a “constant-only” or “intercept-only” model.

The summary output from lm (or glm, if you were doing logistic regression) provides an overall F-statistic and corresponding p-value for the hypothesis test where \(H_0\) is such that all regression coefficients in your model are equal to zero.

##

## Call:

## lm(formula = Sepal.Length ~ Species, data = iris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.6880 -0.3285 -0.0060 0.3120 1.3120

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.0060 0.0728 68.762 < 2e-16 ***

## Speciesversicolor 0.9300 0.1030 9.033 8.77e-16 ***

## Speciesvirginica 1.5820 0.1030 15.366 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.5148 on 147 degrees of freedom

## Multiple R-squared: 0.6187, Adjusted R-squared: 0.6135

## F-statistic: 119.3 on 2 and 147 DF, p-value: < 2.2e-16From the output above, the F-statistic is 119.3, with a corresponding p-value \(< 2.2 \times 10^{-16}\). From this hypothesis test, we would conclude that there is a statistically significant association between species and sepal length of irises, at a 0.05 significant level.

If the null hypothesis involves more than a single regression coefficient being set to zero, and there are additional covariates in our model (such that the “null” model is not an intercept-only model), we need to use the anova function in R to conduct our F-test.

Linear Regression Example: Below we fit a larger linear regression model of house price using living area, the number of bedrooms, and the number of bathrooms, and compare it to a model of house price using only living area as a predictor:

homes <- read.delim("http://sites.williams.edu/rdeveaux/files/2014/09/Saratoga.txt")

mod_homes <- homes %>%

with(lm(Price ~ Living.Area))

mod_homes_full <- homes %>%

with(lm(Price ~ Living.Area + Bedrooms + Bathrooms))

anova(mod_homes,mod_homes_full)## Analysis of Variance Table

##

## Model 1: Price ~ Living.Area

## Model 2: Price ~ Living.Area + Bedrooms + Bathrooms

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 1726 8.2424e+12

## 2 1724 7.8671e+12 2 3.7534e+11 41.126 < 2.2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Notice that we get the smaller nested model by removing Bedrooms and Bathrooms from the larger model.

This means that they are nested and can be compared using the nested F-test.

What are \(H_0\) and \(H_A\), in words? \(H_0\): The number of Bathrooms and Bedrooms does not have an impact on average price after accounting for living area. \(H_A\): The number of Bathrooms or Bedrooms does have an impact on average price after accounting for living area.

What is the test statistic? The F = 41.126 is the test statistic. Unlike the test for slopes, this test statistic does not have a nice interpretation. It seems quite far from 1 (which is what F should be close to if \(H_0\) were true), but there are no rules for “how far is far”.

For a threshold \(\alpha = 0.05\), what is the decision regarding \(H_0\)? The p-value of \(< 2.2*10^{-16}\) is well below the threshold suggesting that it is very unlikely to have observed a difference between these two models as big as we did if the smaller model were true. Thus, we reject \(H_0\) in favor of the larger model.

Logistic Regression Example: Below we fit a larger logistic regression model of whether a movie made a profit (response) based on whether it is a history, drama, comedy, or a family film, compared to a model with only history as a predictor:

movies <- read.csv("https://www.dropbox.com/s/73ad25v1epe0vpd/tmdb_movies.csv?dl=1")

mod_movies <- movies %>%

with(glm( profit ~ History, family = "binomial"))

mod_movies_full <- movies %>%

with(glm( profit ~ History + Drama + Comedy + Family, family = "binomial"))

anova(mod_movies,mod_movies_full,test='LRT') #test='LRT' is needed for logistic models## Analysis of Deviance Table

##

## Model 1: profit ~ History

## Model 2: profit ~ History + Drama + Comedy + Family

## Resid. Df Resid. Dev Df Deviance Pr(>Chi)

## 1 4801 6630.3

## 2 4798 6555.3 3 74.941 3.729e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Notice that we get the smaller nested model by removing Drama, Comedy, and Family from the larger model.

This means that they are nested and can be compared using the nested test.

What are \(H_0\) and \(H_A\)? \(H_0\): Indicators of Drama, Comedy, and Family do not have an impact on whether a movie makes a profit, after accounting for an indicator of History. \(H_A\): Indicators of Drama, Comedy, or Family do have an impact on whether a movie makes a profit, after accounting for an indicator of History.

What is the test statistic? The Deviance = 74.941 is the test statistic. Unlike the test for slopes, this test statistic does not have a nice interpretation. It seems quite far from 1 (which is it should be close to if \(H_0\) were true), but there are no rules for “how far is far”.

For a threshold \(\alpha = 0.05\), what is the decision regarding \(H_0\)? The p-value of \(3.729 * 10^{-16}\) is well below the threshold suggesting that it is very unlikely to have observed a difference between these two models as big as we did if the smaller model were true. Thus, we reject \(H_0\) in favor of the larger model.